How to Manage Concurrent API Requests in React: Best Practices and Strategies

When several requests are in flight at the same time (competing to render the same view), it's tempting to assume that the last request sent will complete last. In reality, the last request might finish first or fail, leaving an earlier request to complete last and overwrite the view with stale data.

This issue occurs more frequently than expected. In some applications, it can lead to serious problems, such as a user purchasing the wrong product or a doctor prescribing the incorrect medication.

Some common reasons include:

- Slow, unreliable, and unpredictable network with varying request latencies

- The backend being under heavy load, throttling requests, or experiencing a Denial-of-Service attack

- The user clicking quickly, traveling, or being in a rural area with poor connectivity

- Simple bad luck

Developers often don't encounter these issues during development, where network conditions are typically good, and the backend API may be running locally with near-zero latency.
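The race can be reproduced without React or a real network. The plain-JavaScript sketch below uses a hypothetical `fakeFetchHero` as a stand-in for a real API call, with a latency chosen per call. The naive handler writes whichever response arrives, so the slower, older request wins.

```javascript
// Hypothetical stand-in for an API call: resolves with a hero after `latencyMs`.
const fakeFetchHero = (id, latencyMs) =>
  new Promise(resolve =>
    setTimeout(() => resolve({ id, name: `hero #${id}` }), latencyMs),
  );

// The "view": whatever the last completed response wrote.
let displayed = null;

// Naive handler: every response overwrites the view, regardless of order.
const showHero = (id, latencyMs) =>
  fakeFetchHero(id, latencyMs).then(hero => {
    displayed = hero;
  });

// The user asks for hero 1, then quickly for hero 2.
// Request 1 is slow (50 ms), request 2 is fast (10 ms).
const done = Promise.all([showHero(1, 50), showHero(2, 10)]).then(() => {
  // The user last asked for hero 2, but hero 1 is on screen.
  console.log(displayed.id); // 1
});
```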

In this post, I will demonstrate these problems using realistic network simulations and runnable demos. I’ll also discuss how to address these issues based on the libraries you are using.

Disclaimer: To focus on race conditions, the following code samples do not address React warnings about calling setState after unmounting.

The offending code

You have probably already seen tutorials with code like this:

```jsx
import React, { useEffect, useState } from 'react';

const StarwarsHero = ({ id }) => {
  const [data, setData] = useState(null);

  useEffect(() => {
    setData(null);
    fetchStarwarsHeroData(id).then(
      result => setData(result),
      e => console.warn('fetch failure', e),
    );
  }, [id]);

  return <div>{data ? data.name : 'Loading...'}</div>;
};
```

```jsx
class StarwarsHero extends React.Component {
  state = { data: null };

  fetchData = id => {
    this.setState({ data: null });
    fetchStarwarsHeroData(id).then(
      result => this.setState({ data: result }),
      e => console.warn('fetch failure', e),
    );
  };

  componentDidMount() {
    this.fetchData(this.props.id);
  }

  componentDidUpdate(prevProps) {
    if (prevProps.id !== this.props.id) {
      this.fetchData(this.props.id);
    }
  }

  render() {
    const { data } = this.state;
    return <div>{data ? data.name : 'Loading...'}</div>;
  }
}
```

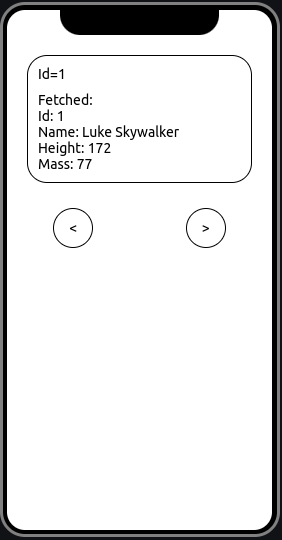

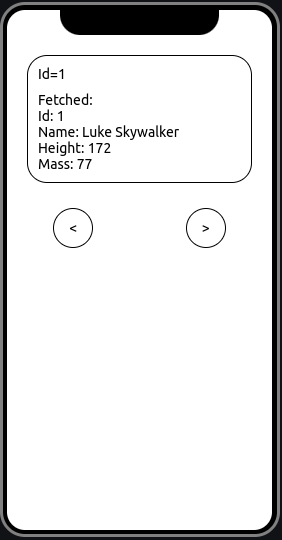

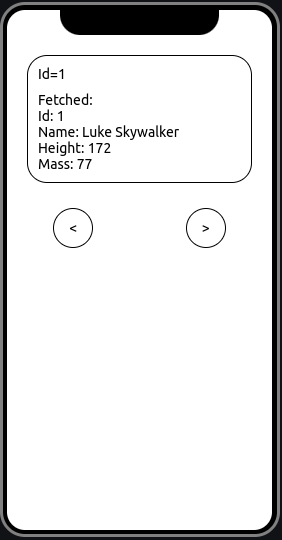

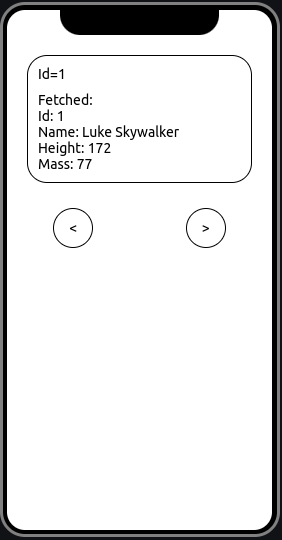

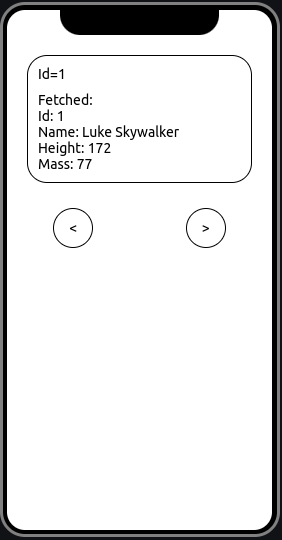

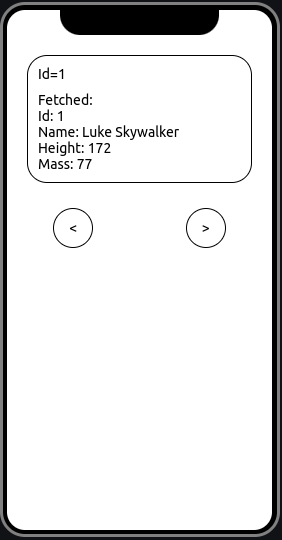

Here is a Star Wars Hero slider. Both of the versions above produce the same outcome.

Even with a fast API and a reliable home network, clicking the arrows very quickly can still expose problems. Remember, debouncing only reduces the chance of encountering issues, but doesn’t eliminate them.

Now, let’s examine what occurs when you're on a train traveling through tunnels.

Simulating bad network conditions

Let’s build some utils to simulate bad network conditions:

```javascript
import { sample } from 'lodash';

// Returns a promise delayed by a random amount, picked from the delay array
const delayRandomly = () => {
  const timeout = sample([0, 200, 500, 700, 1000, 3000]);
  return new Promise(resolve => setTimeout(resolve, timeout));
};

// Throws randomly, with a 1 in 4 chance
const throwRandomly = () => {
  const shouldThrow = sample([true, false, false, false]);
  if (shouldThrow) {
    throw new Error('simulated async failure');
  }
};
```

Adding network delays

You might be on a slow network, or the backend may take time to answer.

```javascript
useEffect(() => {
  setData(null);

  fetchStarwarsHeroData(id)
    .then(async data => {
      await delayRandomly();
      return data;
    })
    .then(
      result => setData(result),
      e => console.warn('fetch failure', e),
    );
}, [id]);
```

Adding network delays + failures

You’re traveling on a train through the countryside, encountering several tunnels. This situation leads to random delays in requests, and some of them might even fail.

```javascript
useEffect(() => {
  setData(null);

  fetchStarwarsHeroData(id)
    .then(async data => {
      await delayRandomly();
      throwRandomly();
      return data;
    })
    .then(
      result => setData(result),
      e => console.warn('fetch failure', e),
    );
}, [id]);
```

As you can see, this code easily leads to weird, inconsistent UI states.

How to avoid this problem

Imagine three requests, R1, R2, and R3, are sent in that sequence and are still pending. The approach is to only process the response from R3, the most recent request.

There are several ways to achieve this:

- Disregard responses from previous API calls

- Terminate earlier API requests

- Combine both cancelling and ignoring previous requests

Ignoring responses from former API calls

Here is one possible implementation.

```javascript
// A ref to store the last issued pending request
const lastPromise = useRef();

useEffect(() => {
  setData(null);

  // Fire the API request
  const currentPromise = fetchStarwarsHeroData(id).then(async data => {
    await delayRandomly();
    throwRandomly();
    return data;
  });

  // Store the promise in the ref
  lastPromise.current = currentPromise;

  // Handle the result, ignoring responses from outdated requests
  currentPromise.then(
    result => {
      if (currentPromise === lastPromise.current) {
        setData(result);
      }
    },
    e => {
      if (currentPromise === lastPromise.current) {
        console.warn('fetch failure', e);
      }
    },
  );
}, [id]);
```

One might consider using the request ID to filter responses, but this approach has limitations. For instance, if a user clicks "next" and then "previous," it could result in two separate requests for the same hero. While this usually isn’t an issue (as the two requests often return identical data), using promise identity offers a more generic and portable solution.

Cancelling former API calls

It’s more efficient to cancel in-progress API requests: the browser can skip parsing unnecessary responses, which reduces CPU and network usage. The fetch API supports request cancellation using an AbortSignal:

```javascript
const abortController = new AbortController();

// Fire the request with an abort signal,
// which permits premature abortion
fetch(`https://swapi.co/api/people/${id}/`, {
  signal: abortController.signal,
});

// Abort the request in flight:
// it will be marked as "cancelled" in devtools
abortController.abort();
```

An abort signal functions like a small event emitter. When it is triggered through its AbortController, it notifies and cancels all requests associated with that signal.
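A minimal illustration of that behaviour, using only the standard `AbortController`/`AbortSignal` API (available in browsers and in Node.js 15+). Two listeners stand in for two operations sharing one signal; a single `abort()` call notifies both.

```javascript
const controller = new AbortController();
const { signal } = controller;

// Two independent "operations" subscribe to the same signal,
// the way two fetch() calls given the same signal would.
const notified = [];
signal.addEventListener('abort', () => notified.push('request A'));
signal.addEventListener('abort', () => notified.push('request B'));

console.log(signal.aborted); // false
controller.abort();
console.log(signal.aborted); // true
console.log(notified); // [ 'request A', 'request B' ]

// Aborting again is a no-op: 'abort' listeners fire only once.
controller.abort();
console.log(notified.length); // 2
```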

Let’s explore how to utilize this feature to address race conditions:

```javascript
// Store the abort controller that can abort
// the last issued request
const lastAbortController = useRef();

useEffect(() => {
  setData(null);

  // When a new request is about to be issued,
  // the first thing to do is cancel the previous one
  if (lastAbortController.current) {
    lastAbortController.current.abort();
  }

  // Create a new AbortController for the new request and store it in the ref
  const currentAbortController = new AbortController();
  lastAbortController.current = currentAbortController;

  // Issue the new request, which may eventually be aborted
  // by a subsequent request
  const currentPromise = fetchStarwarsHeroData(id, {
    signal: currentAbortController.signal,
  }).then(async data => {
    await delayRandomly();
    throwRandomly();
    return data;
  });

  currentPromise.then(
    result => setData(result),
    e => console.warn('fetch failure', e),
  );
}, [id]);
```

This code may seem correct initially, but it’s still not entirely reliable.

Consider the following example:

```javascript
const abortController = new AbortController();

fetch('/', { signal: abortController.signal }).then(async response => {
  await delayRandomly();
  throwRandomly();
  return response.json();
});
```

If you abort the request while the fetch is in progress, the browser handles it. However, if the abort occurs while the then() callback is executing, you must handle it yourself: aborting during an artificial delay will not cancel the delay.
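One way to make such an intermediate step abortable is to have it listen to the same signal. The sketch below uses a hypothetical `abortableDelay` helper (not part of any library mentioned here). It rejects with an error named `AbortError`, the same name fetch uses for aborted requests, when the signal fires before the timeout.

```javascript
// Hypothetical helper: resolves after `ms`, or rejects early if `signal` aborts.
const abortableDelay = (ms, signal) =>
  new Promise((resolve, reject) => {
    const abortError = () => {
      const err = new Error('Delay aborted');
      err.name = 'AbortError'; // same name fetch() uses for aborted requests
      return err;
    };
    if (signal.aborted) {
      reject(abortError());
      return;
    }
    const onAbort = () => {
      clearTimeout(timer); // stop the pending timeout
      reject(abortError());
    };
    const timer = setTimeout(() => {
      signal.removeEventListener('abort', onAbort);
      resolve();
    }, ms);
    signal.addEventListener('abort', onAbort, { once: true });
  });

// Usage: abort 10 ms into a 1-second delay.
const controller = new AbortController();
const result = abortableDelay(1000, controller.signal).then(
  () => 'finished',
  err => err.name,
);
setTimeout(() => controller.abort(), 10);
result.then(outcome => console.log(outcome)); // AbortError
```

With a helper like this, an abort that lands during the delay becomes a rejection instead of a result that is silently applied later.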

```javascript
fetch('/', { signal: abortController.signal }).then(async response => {
  await delayRandomly();
  throwRandomly();
  const data = await response.json();

  // Here you can decide to handle the abortion the way you want.
  // Throwing or never resolving are valid options
  if (abortController.signal.aborted) {
    return new Promise(() => {});
  }

  return data;
});
```

Let’s revisit our issue. Here’s the final, safe solution: it cancels in-progress requests and uses that cancellation to filter the results. We’ll also use the effect’s cleanup function, as suggested on Twitter, to simplify the code.

```javascript
useEffect(() => {
  setData(null);

  // Create the current request's abort controller
  const abortController = new AbortController();

  // Issue the request
  fetchStarwarsHeroData(id, {
    signal: abortController.signal,
  })
    // Simulate some delay/errors
    .then(async data => {
      await delayRandomly();
      throwRandomly();
      return data;
    })
    // Set the result, if not aborted
    .then(
      result => {
        // IMPORTANT: we still need to filter the results here,
        // in case abortion happens during the delay.
        // In real apps, abortion could happen while you are parsing the JSON,
        // with code like "fetch().then(res => res.json())",
        // but also during any other async then() you execute after the fetch
        if (abortController.signal.aborted) {
          return;
        }
        setData(result);
      },
      e => console.warn('fetch failure', e),
    );

  // Trigger the abortion in useEffect's cleanup function
  return () => {
    abortController.abort();
  };
}, [id]);
```

Now we are finally safe.
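One remaining detail: when the cleanup aborts a request that is still inside fetch, the promise rejects with an `AbortError`, so the error callback above logs an expected cancellation as a "fetch failure". Below is a sketch of a hypothetical `settleUnlessAborted` helper that drops both results and errors once the signal has fired. The fake request only imitates how an aborted fetch rejects.

```javascript
// Hypothetical helper: forwards a promise's outcome only if `signal` is not aborted.
const settleUnlessAborted = (promise, signal, { onResult, onError }) =>
  promise.then(
    result => {
      if (!signal.aborted) onResult(result);
    },
    error => {
      // An abort shows up here as a rejection: treat it as "ignore", not "failure".
      if (!signal.aborted) onError(error);
    },
  );

// Usage with a fake request that rejects the way an aborted fetch would.
const controller = new AbortController();
const calls = [];
const fakeRequest = new Promise((_, reject) => {
  controller.signal.addEventListener('abort', () => {
    const err = new Error('The operation was aborted.');
    err.name = 'AbortError';
    reject(err);
  });
});

const settled = settleUnlessAborted(fakeRequest, controller.signal, {
  onResult: result => calls.push(['result', result]),
  onError: error => calls.push(['error', error.name]),
});
controller.abort(); // e.g. the effect cleanup running because `id` changed
settled.then(() => console.log(calls)); // []
```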

Using libraries

Handling all of this manually can be complex and prone to errors. Fortunately, there are libraries that can simplify this process. Let’s take a look at some commonly used libraries for loading data into React.

Redux

There are several methods to load data into a Redux store. Using Redux-saga or Redux-observable works well. For Redux-thunk, Redux-promise, and other middlewares, consider the "vanilla React/Promise" solutions in the following sections.

Redux-saga

Redux-saga offers several take methods, but takeLatest is commonly used because it helps guard against race conditions. It:

- Starts a new saga for each action dispatched to the store that matches the pattern.

- Automatically cancels any previous saga task if it is still running.
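The same "newest task wins, previous task is cancelled" semantics can be sketched without Redux. `takeLatestAsync` below is hypothetical (it is not redux-saga's API). Each call aborts the previous task's `AbortController`, and only the newest task's result reaches the handler. As with redux-saga, the stale request keeps running here, because the hypothetical `fetchHero` ignores the signal.

```javascript
// Hypothetical helper: runs `worker(arg, signal)` and cancels the previous run.
const takeLatestAsync = (worker, onResult) => {
  let current = null;
  return arg => {
    if (current) current.abort(); // cancel the previous task, like takeLatest
    const controller = new AbortController();
    current = controller;
    return worker(arg, controller.signal).then(
      result => {
        // Ignore results from tasks that were cancelled before finishing.
        if (!controller.signal.aborted) onResult(result);
      },
      error => {
        if (!controller.signal.aborted) throw error;
      },
    );
  };
};

// Hypothetical worker that ignores the signal, like a non-cancellable request.
const latencies = { 1: 40, 2: 5 };
const fetchHero = id =>
  new Promise(resolve => setTimeout(() => resolve({ id }), latencies[id]));

const shown = [];
const loadHero = takeLatestAsync(fetchHero, hero => shown.push(hero.id));
const all = Promise.all([loadHero(1), loadHero(2)]);
all.then(() => console.log(shown)); // [ 2 ]
```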

```javascript
import { call, put, takeLatest } from 'redux-saga/effects';

function* loadStarwarsHeroSaga() {
  yield takeLatest(
    'LOAD_STARWARS_HERO',
    function* loadStarwarsHero({ payload }) {
      try {
        // call(fn, ...args): arguments are passed individually, not as an array
        const hero = yield call(fetchStarwarsHero, payload.id);
        yield put({
          type: 'LOAD_STARWARS_HERO_SUCCESS',
          hero,
        });
      } catch (err) {
        yield put({
          type: 'LOAD_STARWARS_HERO_FAILURE',
          err,
        });
      }
    },
  );
}
```

The previous executions of the loadStarwarsHero generator will be "cancelled." However, the underlying API request itself won’t be truly cancelled (you need AbortSignal for that). Redux-saga ensures that only the success or error actions for the most recent request are dispatched to Redux. For cancelling in-flight requests, refer to this issue.

You can also choose to bypass this protection and use take or takeEvery instead.

Redux-observable

Similarly, Redux-observable (via RxJS) offers a solution called switchMap:

- The key difference between switchMap and other flattening operators is its cancelling effect.

- On each emission, the previous inner observable (from the function you provided) is cancelled, and the new observable is subscribed to.

- You can remember this by thinking of it as "switching to a new observable."

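```javascript
import { ofType } from 'redux-observable';
import { of } from 'rxjs';
import { ajax } from 'rxjs/ajax';
import { catchError, map, switchMap } from 'rxjs/operators';

const loadStarwarsHeroEpic = action$ =>
  action$.pipe(
    ofType('LOAD_STARWARS_HERO'),
    // switchMap unsubscribes from the previous inner observable on each new action
    switchMap(action =>
      // ajax.getJSON emits the parsed response body
      ajax.getJSON(`http://data.com/${action.payload.id}`).pipe(
        map(hero => ({
          type: 'LOAD_STARWARS_HERO_SUCCESS',
          hero,
        })),
        catchError(err =>
          of({
            type: 'LOAD_STARWARS_HERO_FAILURE',
            err,
          }),
        ),
      ),
    ),
  );
```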

Other RxJS operators, such as mergeMap, can be used if you are familiar with their behavior. However, many tutorials prefer switchMap as a safer default. Similar to Redux-saga, switchMap itself only unsubscribes from the previous inner observable: whether the underlying in-flight request is actually cancelled depends on how that observable was created, but there are ways to add this functionality.

Apollo

Apollo handles GraphQL query variables so that each time the Star Wars hero ID changes, it fetches the correct data. Whether using HOC, render props, or hooks, Apollo ensures that requesting ID: 2 always returns data for the correct hero.

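```javascript
// Apollo Client 3+ import path
import { useQuery } from '@apollo/client';

// GET_STARWARS_HERO is a GraphQL query document defined elsewhere
const { data } = useQuery(GET_STARWARS_HERO, {
  variables: { id },
});

if (data) {
  // This is always true, hopefully!
  assert(data.id === id);
}
```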

Vanilla React

There are various libraries for loading data into React components without relying on global state management.

I've developed react-async-hook, a lightweight library for handling async data in React components. It offers excellent TypeScript support and guards against race conditions using the techniques discussed above.

```jsx
import React from 'react';
import { useAsync } from 'react-async-hook';

const fetchStarwarsHero = async id =>
  (await fetch(`https://swapi.co/api/people/${id}/`)).json();

const StarwarsHero = ({ id }) => {
  const asyncHero = useAsync(fetchStarwarsHero, [id]);
  return (
    <div>
      {asyncHero.loading && <div>Loading</div>}
      {asyncHero.error && <div>Error: {asyncHero.error.message}</div>}
      {asyncHero.result && (
        <div>
          <div>Success!</div>
          <div>Name: {asyncHero.result.name}</div>
        </div>
      )}
    </div>
  );
};
```

Other libraries that offer protection include:

- react-async: Similar to react-async-hook, but uses a render props API.

- react-refetch: An older library based on HOCs.

There are many other libraries available, but I can’t guarantee their protection, so check their implementations for details.

Note: react-async-hook and react-async might merge in the coming months.

Note: Using <StarwarsHero key={id} id={id} /> forces the component to remount whenever the ID changes. This can be a simple workaround to ensure protection, though it might increase React’s workload.

Vanilla promises and JavaScript

If you’re working with vanilla promises and JavaScript, there are simple tools to prevent these issues.

These tools are also helpful for managing race conditions with thunks or promises in Redux.

Note: Some of these tools are low-level details of react-async-hook.

Cancellable Promises: An old React blog post explains that isMounted() is an antipattern and provides a way to make promises cancellable to avoid the setState-after-unmount warning. While the underlying API call isn’t cancelled, you can choose to ignore or reject the promise response.
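A minimal sketch in the spirit of that post (the exact code there may differ): the hypothetical `makeCancelable` wrapper rejects with `{ isCanceled: true }` once `cancel()` has been called, so callers can tell a cancellation apart from a real failure. The wrapped request itself still runs to completion.

```javascript
// Wraps a promise so its outcome can be turned into a cancellation rejection.
const makeCancelable = promise => {
  let hasCanceled = false;
  const wrappedPromise = new Promise((resolve, reject) => {
    promise.then(
      value => (hasCanceled ? reject({ isCanceled: true }) : resolve(value)),
      error => (hasCanceled ? reject({ isCanceled: true }) : reject(error)),
    );
  });
  return {
    promise: wrappedPromise,
    cancel() {
      hasCanceled = true;
    },
  };
};

// Usage: the fake request resolves after 10 ms, but it is cancelled first.
const { promise, cancel } = makeCancelable(
  new Promise(resolve => setTimeout(() => resolve('hero data'), 10)),
);
cancel(); // e.g. the component unmounted or the id changed
const outcome = promise.then(
  value => `resolved: ${value}`,
  reason => (reason.isCanceled ? 'canceled' : 'failed'),
);
outcome.then(console.log); // canceled
```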

I created the awesome-imperative-promise library to simplify this process:

```javascript
import { createImperativePromise } from 'awesome-imperative-promise';

const id = 1;
const { promise, resolve, reject, cancel } = createImperativePromise(
  fetchStarwarsHero(id),
);

// The calls below are alternatives: only the first one to run takes effect.

// Makes the returned promise resolve manually
resolve({
  id,
  name: 'R2D2',
});

// Makes the returned promise reject manually
reject(new Error("can't load Starwars hero"));

// Ensures the returned promise never resolves or rejects
cancel();
```

Note: These methods must be applied before the API request resolves or rejects. Once a promise is resolved, it cannot be "unresolved."

To automatically handle only the result of the most recent async call, you can use the awesome-only-resolves-last-promise library:

```javascript
import { onlyResolvesLast } from 'awesome-only-resolves-last-promise';

const fetchStarwarsHeroLast = onlyResolvesLast(fetchStarwarsHero);

const promise1 = fetchStarwarsHeroLast(1);
const promise2 = fetchStarwarsHeroLast(2);
const promise3 = fetchStarwarsHeroLast(3);

// promise1: won't resolve
// promise2: won't resolve
// promise3: WILL resolve
```
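How might such a wrapper work? Below is a minimal sketch based on promise identity. `onlyResolvesLastSketch` is a hypothetical name, and this is not the library's actual source. Stale calls return a promise that never settles.

```javascript
// Hypothetical re-implementation of the "only the last call resolves" idea.
const onlyResolvesLastSketch = asyncFn => {
  let latest = null;
  return (...args) => {
    const current = asyncFn(...args);
    latest = current;
    return new Promise((resolve, reject) => {
      current.then(
        value => {
          // Only the most recent call settles; older ones stay pending forever.
          if (current === latest) resolve(value);
        },
        error => {
          if (current === latest) reject(error);
        },
      );
    });
  };
};

// Usage with a hypothetical API where earlier ids answer more slowly.
const fetchHero = id =>
  new Promise(resolve => setTimeout(() => resolve({ id }), 30 - id * 10));
const fetchHeroLast = onlyResolvesLastSketch(fetchHero);

const settled = [];
[1, 2, 3].forEach(id => fetchHeroLast(id).then(hero => settled.push(hero.id)));
setTimeout(() => console.log(settled), 100); // [ 3 ]
```

One trade-off of never-settling promises: any `finally()` callback attached to a stale call never runs, so cleanup such as hiding a spinner should not depend on it.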

Conclusion

For your next React data loading scenario, I encourage you to properly manage race conditions.

Additionally, consider adding small delays to your API requests in the development environment. This can help you more easily spot potential race conditions and poor loading experiences. Making these delays mandatory might be safer than relying on developers to enable slow network options in devtools.
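A sketch of that idea, assuming your API calls go through a function you can wrap once. `withDevDelay` is a hypothetical helper, and reading `process.env.NODE_ENV` assumes a bundler or Node.js environment that defines it. Like `delayRandomly` earlier, the delay is added after the response, so responses can arrive out of order.

```javascript
// Hypothetical wrapper: adds a random artificial delay outside production.
const withDevDelay = (asyncFn, maxDelayMs = 1000) => async (...args) => {
  const result = await asyncFn(...args);
  if (process.env.NODE_ENV !== 'production') {
    const delayMs = Math.floor(Math.random() * maxDelayMs);
    await new Promise(resolve => setTimeout(resolve, delayMs));
  }
  return result;
};

// Usage: wrap the real API function once, at module level.
const fetchHero = async id => ({ id, name: 'Luke Skywalker' });
const fetchHeroSlow = withDevDelay(fetchHero, 50);

fetchHeroSlow(1).then(hero => console.log(hero.name)); // Luke Skywalker
```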

I hope you found this post helpful and informative. This is my very first technical blog post!
